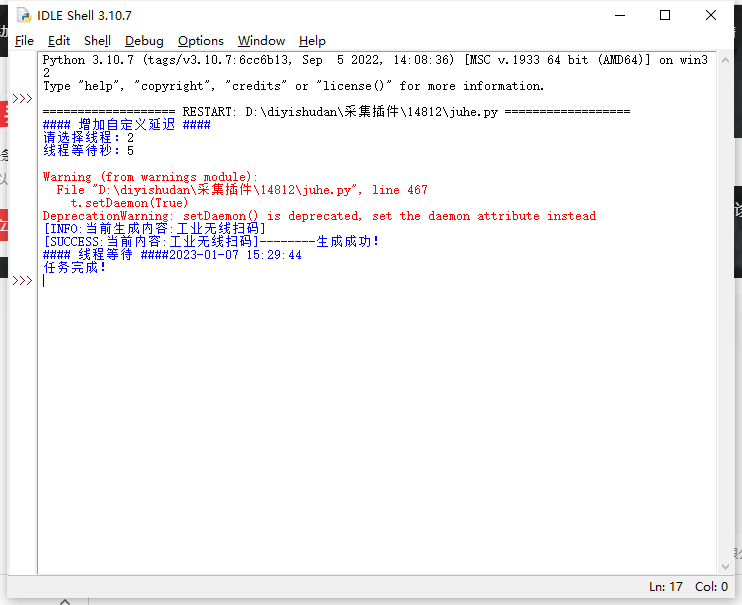

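Python Q&A aggregation scraper: source code, fully restored by decompilation

The tool builds articles by combining titles and answers collected from Toutiao search, Baidu search suggestions, Sogou search suggestions, Baidu Zhidao search, Sina iAsk, and Sogou Wenwen.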
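
As a rough illustration of the combining step described above, the sketch below assembles an article from a title and a list of answers gathered from several sources. The function name `build_article`, its `max_answers` parameter, the de-duplication step, and the HTML output format are all assumptions made for illustration; they are not taken from the original tool.

```python
def build_article(title, answers, max_answers=5):
    """Combine a title with de-duplicated answers into a simple HTML article.

    Hypothetical helper: the name, parameters, and output format are
    illustrative assumptions, not part of the original source.
    """
    seen = set()
    paragraphs = []
    for answer in answers:
        text = answer.strip()
        # Skip empty answers and exact duplicates returned by different sources.
        if not text or text in seen:
            continue
        seen.add(text)
        paragraphs.append('<p>%s</p>' % text)
        # Stop once enough answers have been collected.
        if len(paragraphs) >= max_answers:
            break
    return '<h1>%s</h1>\n%s' % (title.strip(), '\n'.join(paragraphs))


if __name__ == '__main__':
    sample_answers = ['Answer from source A. ', 'Answer from source A.', '', 'Answer from source B.']
    print(build_article('Sample question?', sample_answers))
```

Dropping duplicates before joining matters here because different search sources can return the same answer text.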

This Python file was decompiled and its source code fully restored; it has been tested and works.

The source code is as follows:

import configparser
import json
import os
import random
import re
import threading
import time
from queue import Queue
from urllib import request
from urllib.parse import quote

import requests
from lxml import etree

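# Read the broadband dial-up credentials and the request cookie from peizhi.ini.
config = configparser.RawConfigParser()
config.read('peizhi.ini')
ZHANGHAO = config.get('KUANDAI', 'ZHANGHAO')  # broadband account name
MIMA = config.get('KUANDAI', 'MIMA')  # broadband password
IP = int(config.get('KUANDAI', 'IP'))
KD_QUEUE = Queue(1000000)
web_ck = config.get('KUANDAI', 'web_ck')  # cookie sent to so.toutiao.com

# Console text colour codes.
FOREGROUND_RED = 0x0c  # red.
FOREGROUND_GREEN = 0x0a  # green.
FOREGROUND_BLUE = 0x09  # blue.

CHA = ''

# Load the replacement word list from '替换词库.txt', one entry per line.
with open('替换词库.txt', 'r', encoding='utf8') as f:
    tihuan_list = f.read().split('\n')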

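def connect():
    # Dial the Windows broadband connection named '宽带连接' via rasdial.
    cmd_str = 'rasdial %s %s %s' % ('宽带连接', ZHANGHAO, MIMA)
    os.system(cmd_str)
    print('拨号')  # "dialing"
    time.sleep(2)


def disconnect():
    # Hang up the broadband connection.
    cmd_str = 'rasdial 宽带连接 /disconnect'
    os.system(cmd_str)
    print('断开链接')  # "disconnected"
    time.sleep(2)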

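def get_connect():
    # Probe a known URL, then dial (or hang up and redial) the broadband connection.
    header_baidu = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) '
                      'Chrome/69.0.3497.100 Safari/537.36'}
    try:
        code = requests.get('http://apps.game.qq.com/comm-htdocs/ip/get_ip.php', headers=header_baidu,
                            timeout=5).status_code
        if code != 200:
            # The probe did not succeed: dial.
            connect()
        else:
            # Already online: hang up, wait, and redial.
            disconnect()
            time.sleep(5)
            connect()
    except Exception:
        # The probe raised (for example, a timeout): hang up and redial.
        disconnect()
        time.sleep(5)
        connect()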

def get_toutiao_urls(wd):
    # Search Toutiao's question tab for the keyword `wd`.
    url = 'https://so.toutiao.com/search?keyword=' + wd + '&pd=question&source=search_subtab_switch&dvpf=pc&aid=4916&page_num=0'
    headers = {'Cache-Control': 'no-cache',
               'Connection': 'keep-alive',
               'Cookie': web_ck,
               'Host': 'so.toutiao.com',
               'Pragma': 'no-cache',
               'Referer': 'https://so.toutiao.com/search?keyword=seo&pd=question&source=search_subtab_switch&dvpf=pc&aid=4916&page_num=0',
               'sec-ch-ua-mobile': '?0',
               'Sec-Fetch-Dest': 'document',
               'Sec-Fetch-Mode': 'navigate',
               'Sec-Fetch-Site': 'same-origin',
               'Sec-Fetch-User': '?1',
               'Upgrade-Insecure-Requests': '1',
               'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36'}
    res = requests.get(url=url, headers=headers)
    res.encoding = 'utf8'
    # Extract titles from the JSON embedded in the page; drop any that contain a backslash.
    title_list = re.findall('"title":"(.*?)",', res.text)
    title_list = [i for i in title_list if '\\' not in i]
    # Keep only result URLs that contain 'wukong'.
    url_list = re.findall('"url":"(.*?)","', res.text)
    url_list = [i for i in url_list if 'wukong' in i]
    # Return (https URLs, titles).
    return [i.replace('http:', 'https:') for i in url_list], title_list

def get_wukong_content(url):
    headers = {'accept-encoding': 'gzip, deflate, br',
               'accept-language': 'zh-CN,zh;q=0.9,zh-TW;q=0.8,en-US;q=0.7,en;q=0.6',
               'cache-control': 'no-cache',
               'pragma': 'no-cache',
               'sec-ch-ua-mobile': '?0',
               'sec-fetch-dest': 'document',
               'sec-fetch-mode': 'navigate',
               'sec-fetch-site': 'none',
               'sec-fetch-user': '?1',
               'upgrade-insecure-requests': '1',
               'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36'}
    try:
        res = requests.get(url=url, headers=headers)
        if res.status_code == 200:
            res.encoding = 'utf8'
            wenzhang_list = \
json.loads(re.findall('INITIAL_STATE__=([\\s\\S]*?)